Midjourney: Where Imagination Meets Intelligence

A deep dive into Midjourney, the AI that transforms language into art. Learn how versions, tools, and prompting techniques can elevate your creative workflow.

🎨 What Is Midjourney?

Midjourney is not just a tool. It is a new way to think visually.

It translates words into images. Fast. Strange. Poetic. Precise. Midjourney does not replicate what already exists. It uncovers what has yet to be seen.

Launched in 2022 by David Holz as part of Midjourney, Inc., it stands apart from other AI platforms because of how it sees. Rather than simply analyzing data, it interprets mood, style, and suggestion. This makes it a powerful companion for designers, architects, and creatives of all kinds.

You prompt it. It responds with a scene, a tone, a world.

🎨 Why Midjourney Matters for Designers

🌌 Mood over Mimicry

Midjourney doesn’t just copy. It captures emotion.

Most AI tools focus on surface-level precision: textures, proportions, photorealism. Midjourney moves differently. It leans into emotional design. It's not chasing accuracy. It's chasing feeling.

You’re not asking it to paint what you see. You’re asking it to express what you sense.

That’s what sets Midjourney apart in the world of AI design. Its strength is in building atmosphere, not perfection. The shadows, the colors, the balance come together to create a vibe, not a diagram.

Ask it for “a lonely street in neon fog.” It won’t just return streetlights and smoke. It gives you longing. It gives you quiet. You feel something. You’re not always sure why, but it lands.

That’s the power of digital aesthetics guided by emotion, not rules.

If you’re shaping a visual identity, this matters. Replicas rarely move people. Mood does.

Midjourney understands that. And it creates accordingly.

⚡ Speed with Exploration

Midjourney accelerates the creative process. It allows rapid testing of visual ideas without getting stuck in the technical grind.

Designers can move through styles, moods, mediums, and aesthetics in minutes. What once took days of sketching or building visual drafts can now happen in a single afternoon.

Want to try Brutalist typography on a soft pastel palette? Done. Curious how surrealism blends with minimalism? Generate it, tweak it, compare. Midjourney makes experimentation fast, fluid, and fun.

This speed unlocks better decisions. You’re not guessing. You’re seeing. And because it’s instant, you’re more likely to explore bold or unusual directions.

It’s not about skipping the craft. It’s about freeing up the craft. The tool handles the grunt work so your focus stays on form, emotion, and impact.

In the world of AI design, that kind of agility is gold.

🌱 Seed for Creation

Midjourney is not the final product. It’s the spark.

It provides direction, mood, and momentum. It gives you something to react to. A form, a color story, a concept you hadn’t considered.

But the refinement? That’s still yours.

Whether you're an architect exploring spatial mood, a stylist visualizing looks, or a concept artist shaping worlds, Midjourney offers a place to start. It’s a seed, not a solution.

You guide the vision. You decide what stays, what changes, and what evolves. The tool can inspire, but it doesn't design with intention. Only humans do that.

In the space of AI design, this distinction matters. Creativity isn't just output. It's choice. It's emotion. It's knowing why something feels right.

Midjourney gets the idea moving. You make it meaningful.

Its value grows when guided with clarity. Without human intention, it produces surfaces. With human direction, it creates resonance.

🧬 The Beginning: Imagination as Technology

David Holz, who previously co-founded Leap Motion, had already explored the human-machine interface. With Midjourney, he moved from interaction to imagination. He built a machine that doesn’t just respond. It dreams.

Midjourney entered open beta in July 2022, not through a polished website, but inside Discord. That decision set it apart from other AI platforms.

While other AI platforms focused on clean dashboards and tidy UX, Midjourney embraced creative chaos. It was raw, fast, and public. You saw what others made in real time. They saw yours. Feedback wasn’t hidden. It was alive.

Creation became a kind of performance. Each prompt felt like a live experiment. The room buzzed with energy.

That momentum pulled people in. Architects, illustrators, fashion stylists, UI designers, and storytellers all joined the stream. Midjourney blurred the line between user and audience. You were never designing alone.

Over time, the platform evolved. It added structure, better workflows, and refined outputs.

But it never lost its original spark. Midjourney still feels like imagination as technology, shared and electric.

🧠 Why Midjourney Isn’t Just Another Generator

Most tools in the AI space focus on speed. Midjourney focuses on style.

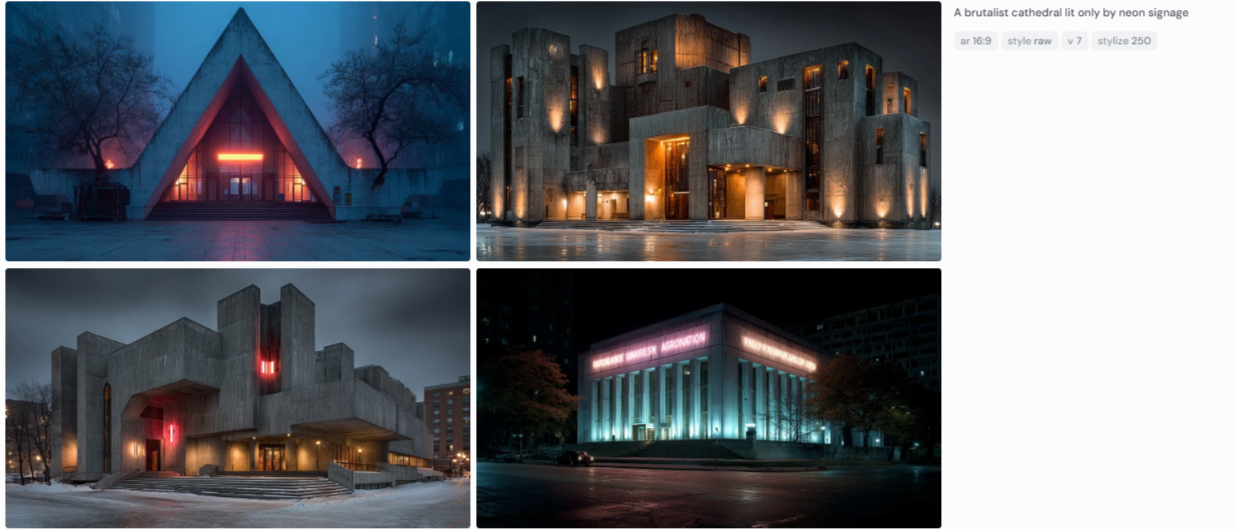

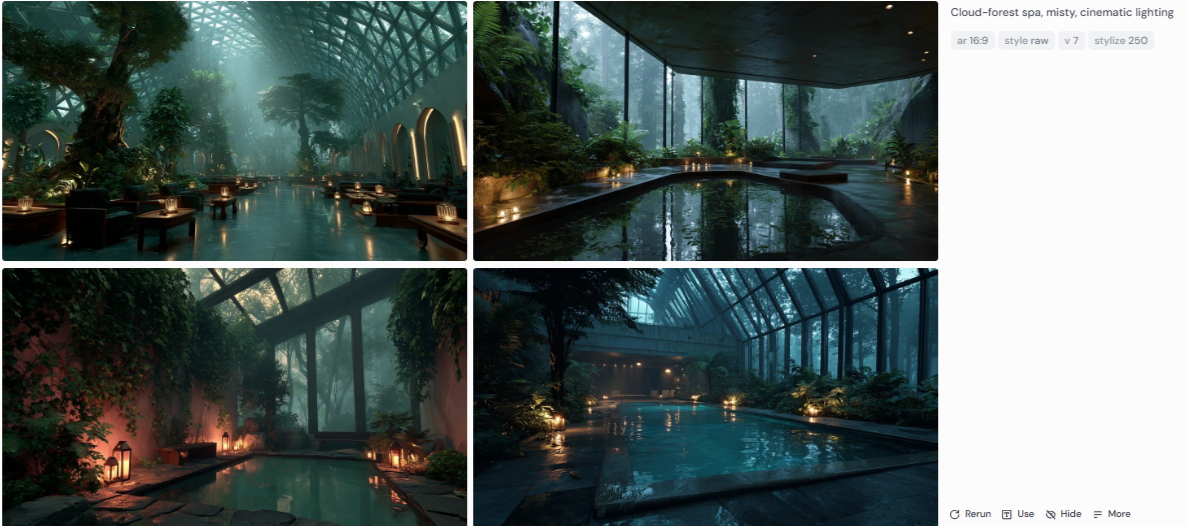

It doesn’t just follow commands. It listens for rhythm. For visual tone. A prompt like:

- “Retro-futuristic desert resort at dawn”

- “A brutalist cathedral lit only by neon signage”

- “Cloud-forest spa, misty, cinematic lighting”

...yields results that don’t just look good—they feel good. The system doesn’t understand emotion. But it understands how emotion looks.

It creates art not by copying—but by remixing the edge of what’s possible.

🎨 Read the post: Designing Emotion for a deeper dive into AI’s relationship with poetic intent and feeling.

📅 Midjourney Versions, at a Glance

Midjourney has evolved from raw output to refined artistry. Each version deepens how prompts are understood and how images are composed. The result is a tool that feels more like visual intuition than code.

🌀 v1 (February 2022)

The origin phase. Outputs were surreal, dreamlike, and unpredictable. Strange faces, warped limbs, and abstract environments filled the grids. It felt chaotic, but the creative potential was impossible to miss.

In these early days, Midjourney revealed the raw edge of AI design. Messy. Fresh. Full of possibility. It was a spark for what would become a new visual language.

Midjourney v1 launched in February 2022, as noted on Wikipedia, marking the beginning of its rapid evolution.

🧱 v2 (April 2022)

Structure began to take shape. Prompts yielded more stable forms. Faces became recognizable. Lines grew cleaner. Though still experimental, this version moved closer to visual identity with intention.

Midjourney v2 arrived in April 2022, refining how the system interpreted language and forming output that felt more controlled and directional. The release date is confirmed on Wikipedia.

With v2, designers caught glimpses of what was possible, not just AI making pictures but a machine learning to honor creative intent.

🔍 v3 (July 2022)

This version marked a real shift. Compositions became clearer. Anatomy looked more natural. Lighting improved across the board. The images had structure. Designers could test ideas and build from the results with more confidence.

Midjourney v3 became the default model starting in July 2022, as confirmed by Wikipedia. It remained active until the release of v4 later that year.

With v3, Midjourney moved deeper into practical use. The images were no longer just experiments. They were usable drafts. This version pushed AI design into new territory, where speed met craft and where inspiration became a launchpad for real creative work.

🧠 v4 (November 2022)

This version was built from the ground up. A new model architecture brought major gains in realism. You began to see clean geometry, richer textures, and expressive human features. The visuals felt purposeful. It marked a shift deeper into digital aesthetics.

Midjourney v4 launched on November 5, 2022, as noted on Wikipedia. This update pushed the tool toward use in real creative workflows.

🎥 v5 (March 2023)

Cinematic and soft, v5 introduced photoreal textures and smoother gradients. Natural lighting became a signature. Skin tones looked more believable. Faces gained depth. With mood and story merged in a single frame, this version made images that felt alive.

The alpha iteration of v5 was released March 15, 2023, per Wikipedia.

✒️ v5.1 (May 2023)

Sharper and more tuned, v5.1 improved prompt accuracy and added control. Designers used it for editorial layouts, fashion boards, and minimal design. It leaned into a clean, intentional style.

Version 5.1 was released May 4, 2023, according to Wikipedia.

🎭 v5.2 (June 2023)

This version embraced drama and variation. The “High Variation Mode” enabled fast idea exploration. The outputs became richer, moodier, more stylized. Artists used it for concept work, moodboards, and pushing boundaries.

Want to try “zoom out” to expand a scene? v5.2 added that too. It launched in June 2023, per Wikipedia.

🚀 v6 (December 2023)

A technical leap. Hands became usable. Faces grew steadier. Perspective aligned more naturally. Prompts became more literal and precise.

Midjourney released v6 on December 21, 2023, per Wikipedia. This version raised the bar for visual identity and AI design fidelity.

🧬 v6.1 (July 2024)

A refined upgrade to v6. Edges became tighter. Consistency improved across compositions. Layout control responded better to detailed prompts. Even the smallest elements held up under close inspection.

This version is ideal for designers working on full brand systems, product mockups, or highly polished visual campaigns.

Midjourney v6.1 was released on July 30, 2024. It builds directly on v6, which launched in December 2023.

🌐 v7 (April 2025)

The most advanced model so far. Available exclusively via web UI. It brings fuller context understanding. It handles poetic or multi-layered prompts with better clarity. Lighting feels natural, faces and props align, scenes feel coherent.

According to Wikipedia, version 7 builds on all prior gains and refines the synergy between style, prompt, and intention.

🎨 Niji Series (Anime and Illustration)

Midjourney’s Niji models, built in partnership with Spellbrush, are made for creators working in character design, anime aesthetics, and narrative storytelling. These models are optimized for expressive poses, dramatic framing, and emotional clarity, especially in visually rich, story-driven worlds.

✏️ Niji 5

Released in early 2023, Niji 5 focused on anime tropes and dynamic posing. It was designed for stylized expression, from bold gestures to iconic character styling. Perfect for character sheets, fantasy scenes, and visual novels, it gave creators more control over personality and mood.

🌟 Niji 6 (Current)

Niji 6 launched on June 7, 2024, and brought a dramatic leap in rendering quality. Costume textures became more detailed. Lighting grew more vivid. Composition became more cinematic.

This version excels at comics, magical realism, and narrative illustration. It supports layered emotion and visual storytelling that feels alive on the page.

🧩 Which Version Should You Use?

- v7 for the most intelligent and nuanced rendering across all domains

- v6.1 for stable, polished workflows in production settings

- v5.2 for fast experimentation and stylized exploration

- Niji 6 when your focus is anime aesthetics, narrative concept art, or expressive character-driven work

Midjourney is no longer just a tool that generates images. It interprets your intention. Each version opens a new kind of conversation between creator and machine, one shaped by mood, meaning, and visual rhythm.
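The version picks above can be kept handy as a small lookup that appends a model parameter to a prompt. This is only a sketch: the use-case labels and the `with_version` helper are invented for illustration, while `--v` and `--niji` are the parameters Midjourney uses to select a model.

```python
# Hypothetical use-case labels mapped to Midjourney model parameters.
# The labels are assumptions for this sketch; the flags follow the
# recommendations in the list above.
VERSION_FLAGS = {
    "general": "--v 7",
    "production": "--v 6.1",
    "experimentation": "--v 5.2",
    "anime": "--niji 6",
}


def with_version(prompt, use_case):
    """Append the model parameter recommended for a given use case."""
    if use_case not in VERSION_FLAGS:
        raise ValueError(f"unknown use case: {use_case!r}")
    return f"{prompt} {VERSION_FLAGS[use_case]}"


print(with_version("cloud-forest spa, misty, cinematic lighting", "general"))
print(with_version("heroine on a rooftop at dusk, dramatic framing", "anime"))
```

Keeping the mapping in one place makes it easy to rerun the same prompt against a different model and compare the grids side by side.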

💬 Discord vs Web UI: Two Interfaces, One Vision

Midjourney began inside Discord, and for over two years it remained the only way to use the platform. It was fast. Open. Loud. Users typed /imagine prompts in public channels, generating images in real time alongside thousands of others. The experience was collaborative, chaotic, and deeply creative. You learned by observing others. You improved by iterating in public. It felt like a live, always-on design lab powered by AI.

But Discord had natural limitations. Conversations and images were scattered across channels. Threads grew dense. Commands needed memorization. There was little room for focus or private workflows.

That changed in August 2024, when Midjourney launched its full-featured Web UI alongside version 6.1, consolidating all functionality into one clean, intuitive interface. Image generation, model selection, remixing, and archive management became seamless and accessible. According to Wikipedia, this shift marked a major step toward broader usability beyond Discord.

Prompting

- Discord: Type /imagine in a bot channel.

- Web UI: Type into a visual prompt bar with integrated settings for model version, aspect ratio, and stylization.

Community Experience

- Discord: Public by default. Everyone sees generations in real time.

- Web UI: Private by default. Designed for individual workflows and focused outputs.

Image Navigation

- Discord: Scroll through threads or user history in chat.

- Web UI: Use a searchable, filterable gallery. Browse by model, prompt, or time.

Remixing and Editing

- Discord: Use slash commands or the buttons under each image grid (e.g. U1 to U4 for upscaling, V1 to V4 for variations).

- Web UI: Click to remix, regenerate, upscale, or favorite. Actions are visual and centralized.

Project Workflow

- Discord: Best for experimentation and community learning.

- Web UI: Ideal for production, iteration, and professional asset delivery.

Midjourney didn’t abandon Discord. It evolved beyond it.

Both environments serve different creative needs.

Discord is a studio floor, buzzing with collective energy.

Web UI is a personal workspace, built for clarity, iteration, and intent.

Together, they support both sides of the modern creative mind:

The explorer, who thrives in chaos,

And the editor, who sharpens ideas into results.

🛠 What Midjourney Solves for Creatives

Creative work begins in uncertainty.

You feel an idea. A theme. A rough picture in your mind. Translating that into paper or pixels once took time. Sketches, moodboards, endless revisions. The process was slow, and the spark often faded before the form appeared.

Midjourney changes that. It closes the gap between concept and image. It gives shape to the invisible.

You can explore:

- Moodboard ideas for a brand or campaign

- Lighting and texture studies that test atmosphere

- Environments for a story, a product, or an interior space

What used to take hours now takes minutes. And you don’t just get one image. You get variations, angles, and unexpected outcomes that push your thinking further.

Midjourney doesn’t erase craft. It expands it. It gives you more room for decision-making, for choosing what resonates, and for shaping a stronger visual identity.

In the world of AI design, this is the difference between waiting for inspiration and working directly with it. Midjourney makes the creative process faster, more fluid, and more alive.

🎯 The Real Skill: Not Prompting, But Directing

Writing a prompt is easy.

Knowing why you write it is harder.

Midjourney isn’t about throwing random words at a machine. It is about vision. A great designer does not just generate images. They guide them. They refine them. They notice when something feels wrong, and they know when it finally feels right.

That is the difference between a casual user and a true visual thinker.

Midjourney is the engine. But taste is the driver. The tool can shape pixels, but only you can decide what carries meaning. Only you can sense what belongs in a visual identity, what strengthens a brand story, or what resonates in digital aesthetics.

This is the real skill of AI design: not typing prompts, but directing them. Not asking for images, but shaping intention. The stronger your vision, the stronger Midjourney becomes as your creative partner.

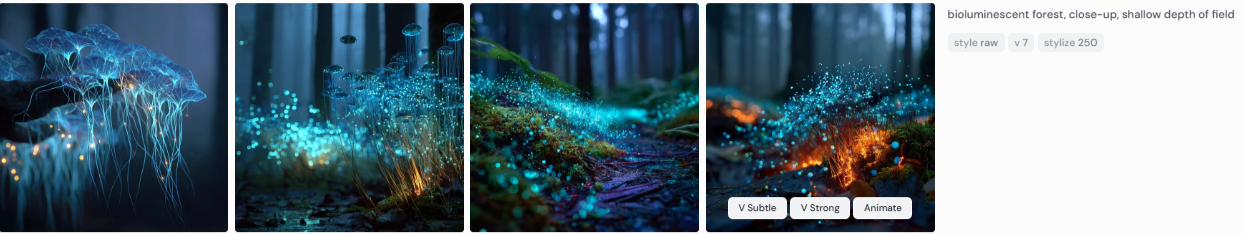

✏️ How Prompting Works in Midjourney

A Midjourney image begins with a single thing: a prompt.

But not all prompts are equal.

The most effective ones use clear nouns, strong adjectives, and sometimes even camera language. Instead of writing a paragraph, think in keywords:

- “bioluminescent forest, close-up, shallow depth of field”

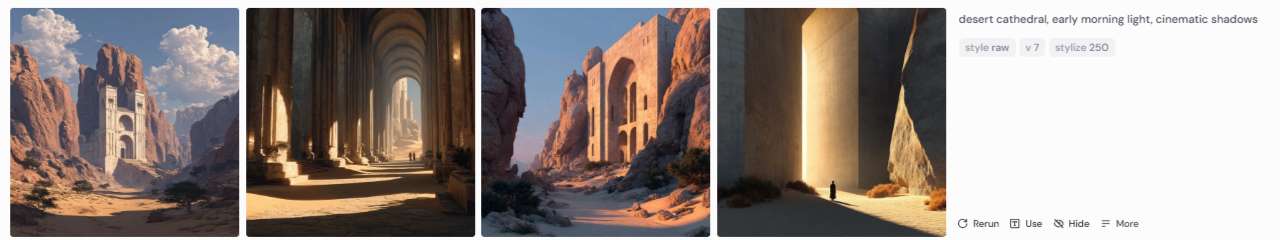

- “desert cathedral, early morning light, cinematic shadows”

Midjourney reads these as cues. It doesn’t interpret your meaning—it matches visual patterns to your language.

Prompt Tip: Place the most important concept first. If you want “paper sculpture” to define your image, say it early. Prompts are read left to right, just like you’d scan a headline.
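If you assemble prompts from notes or a spreadsheet, the keyword-first habit can be captured in a few lines. The sketch below is a hypothetical helper, not part of Midjourney: `build_prompt` simply puts the defining concept first and joins the remaining cues with commas.

```python
def build_prompt(subject, *details):
    """Join keyword phrases into one comma-separated prompt, subject first."""
    parts = [subject, *details]
    # Strip stray whitespace and drop empty phrases.
    cleaned = [part.strip() for part in parts if part and part.strip()]
    return ", ".join(cleaned)


prompt = build_prompt(
    "paper sculpture",        # the concept that should define the image
    "desert cathedral",
    "early morning light",
    "cinematic shadows",
)
print(prompt)
# prints: paper sculpture, desert cathedral, early morning light, cinematic shadows
```

Swapping the first argument is a quick way to test how much the leading concept shapes the result.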

🧩 Modifying Your Creations

Midjourney is not only about generating fresh images. It is also about evolving them. The platform gives you tools to refine, reshape, and extend your ideas without starting over.

Once you create an image, you can:

- Upscale: Enhance the resolution and surface detail so your concept looks polished and production-ready.

- Vary: Spin off four new interpretations of the original, each carrying a slightly different perspective or mood.

- Remix: Adjust your prompt, swap styles, or tweak parameters midway, letting the design grow in unexpected ways.

- Pan: Extend the canvas to the left, right, up, or down while maintaining visual continuity. This is perfect for environments, landscapes, and immersive storytelling.

These tools matter when you are shaping a story, refining a visual identity, or building a set of cohesive designs. You are not starting from scratch. You are evolving what already works.

Think of this as art direction in motion. One step at a time, Midjourney lets you push the boundaries of your concept. Each iteration carries you closer to an image that feels intentional, resonant, and true to your vision.

📐 Aspect Ratios and Composition

Every image you create in Midjourney lives inside a frame. That frame is the aspect ratio, and it does more than define size. It sets mood. It shapes story. It determines how a viewer feels the moment they see the work.

Common aspect ratios include:

- 1:1 (square) → Balanced and centered. Ideal for product visuals, profile graphics, or clean social media posts.

- 3:2 (landscape) → Cinematic and narrative-driven. This ratio stretches space and pulls the eye across a scene, perfect for environments or storyboards.

- 9:16 (portrait) → Vertical and bold. Designed for mobile-first platforms, fashion shoots, and editorial-style storytelling.

By default, Midjourney outputs images in 1:1, but shifting the ratio can shift the emotion entirely. A wide format emphasizes space, scale, and atmosphere. A tall format highlights detail, elegance, and focus.

Aspect ratio is one of the most overlooked creative levers in AI design. It is not just a technical setting. It is a way to direct rhythm, balance, and energy in your digital aesthetics.
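To get a feel for how a ratio changes the frame, it helps to turn it into numbers. The sketch below is illustrative only: `frame_size` and its 1024-pixel long edge are assumptions chosen for the example, not Midjourney's actual output sizes. The `--ar` parameter, however, is how Midjourney accepts an aspect ratio in a prompt.

```python
from fractions import Fraction


def parse_ratio(text):
    """Turn a string such as "3:2" into an exact width/height fraction."""
    width, height = text.split(":")
    return Fraction(int(width), int(height))


def frame_size(ratio_text, long_edge=1024):
    """Return (width, height) in pixels with the longer side equal to long_edge.

    long_edge=1024 is an arbitrary illustration value, not a Midjourney setting.
    """
    ratio = parse_ratio(ratio_text)
    if ratio >= 1:
        # Landscape or square: width is the long side.
        return long_edge, round(long_edge / ratio)
    # Portrait: height is the long side.
    return round(long_edge * ratio), long_edge


def with_aspect_ratio(prompt, ratio_text):
    """Append the aspect ratio parameter to a prompt string."""
    return f"{prompt} --ar {ratio_text}"


for ratio in ("1:1", "3:2", "9:16"):
    print(ratio, frame_size(ratio))

print(with_aspect_ratio("desert cathedral, early morning light", "3:2"))
```

Seeing the numbers side by side makes the trade-off concrete: the same long edge gives a wide frame far less height, and a tall frame far less width.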

🌀 Read the post: Form Meets Feeling to see how machines interpret aesthetics beyond rules.

Use aspect ratios intentionally. The choice of frame can make the difference between a design that is functional and one that feels unforgettable.

🖼️ Image Size and Resolution

Midjourney does not speak in pixels. It speaks in ratios, clarity, and intent.

Still, image size matters when you are creating for print, portfolios, or client-ready work. A concept draft is one thing. A polished presentation is another. Knowing the difference saves time, keeps quality high, and protects your visual identity.

🧾 Standard Images

These are the default outputs.

They are perfect for:

- Drafting quick concepts

- Building moodboards

- Fast creative play

Standard images are light and flexible. They are excellent for experimentation but often lack the depth or resolution needed for professional print work.

🔍 Upscaled Images

Upscaling sharpens the story. It adds clarity, depth, and polish.

When you upscale, you gain:

- Texture and fine detail

- Clean edges

- Visual strength that holds up in presentations, mockups, and client decks

Use upscaling when you move from AI design exploration to delivery. Think branding visuals, book covers, product presentations, and display screens. Upscaling turns a sketch into something closer to digital aesthetics ready for an audience.
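Before sending an upscaled image to print, a quick bit of arithmetic shows whether it has enough pixels. The sketch below assumes 300 DPI, a common rule of thumb for print rather than anything Midjourney specifies, and the function names and sample sizes are hypothetical.

```python
import math


def pixels_needed(width_in, height_in, dpi=300):
    """Pixels required to print at the given size in inches."""
    return math.ceil(width_in * dpi), math.ceil(height_in * dpi)


def max_print_size(width_px, height_px, dpi=300):
    """Largest print size in inches that an image supports at the given DPI."""
    return width_px / dpi, height_px / dpi


# A 6 x 9 inch book cover at 300 DPI.
print(pixels_needed(6, 9))

# How large a hypothetical 2048 x 2048 image can print at 300 DPI.
width_in, height_in = max_print_size(2048, 2048)
print(f"{width_in:.1f} x {height_in:.1f} inches")
```

If the image falls short of the target, that is the signal to upscale further or to plan for a smaller print.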

↗️ Zoom and Pan Tools

Sometimes a frame is not enough. Midjourney’s zoom and pan tools let you grow beyond the edges.

You can:

- Expand the frame without losing tone

- Tell a longer story in one scene

- Explore new angles and perspectives

This is ideal for:

- Worldbuilding in narrative projects

- UI/UX storytelling with extended screens

- Cinematic sequences that need flow from frame to frame

Zoom and pan are not just tools. They are ways to stretch imagination without breaking continuity.

🎯 Tip for Better Results

Start simple. Do not overload your first prompt. Let Midjourney breathe. Then upscale with purpose.

This workflow avoids visual artifacts and delivers cleaner, stronger results. The fewer distractions you introduce early, the better the foundation for refinement later.

📱 Mobile Note

Upscaled images are heavier. They look stunning but can slow down performance on smaller screens. Always optimize for the web with compressed formats such as JPG or WebP. This keeps your visuals fast, beautiful, and mobile-friendly.

🌀 Emotion, Interpreted by Code

AI doesn’t feel. But we do.

And that’s where its creative power begins.

Midjourney doesn’t understand longing, nostalgia, or wonder. But it understands what those things look like—because it has seen them in countless images, styles, and compositions.

When you prompt it with something like:

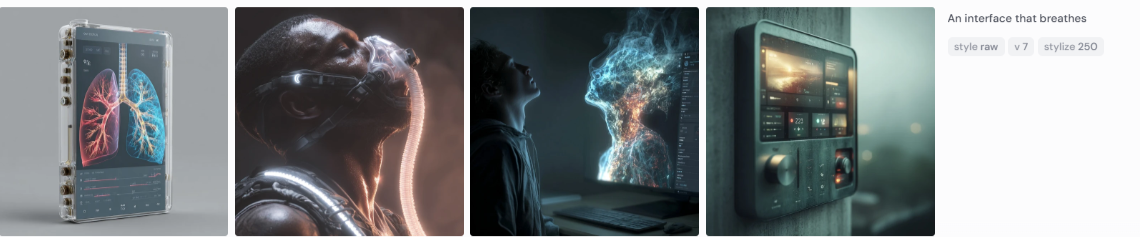

- “An interface that breathes”

- “An outfit that looks like a question”

- “A room that feels like memory”

…it doesn’t grasp the metaphor. But it reaches for visual patterns that match what those words tend to mean to us.

What do we see?

For “an interface that breathes”, it generates organic UI with lit panels, soft-glow elements, fluid shadows, and anatomical motifs. It recalls breath not literally, but through motion and atmosphere.

For “an outfit that looks like a question”, Midjourney plays with form. Question marks appear on garments. Structure becomes offbeat, with unbuttoned layers, mismatched textures, or pieces that interrupt the silhouette. There is curiosity and hesitation in the design, like fashion holding back an answer.

And for “a room that feels like memory”, it constructs warm, cluttered interiors with vintage colors, faded walls, and natural light filtered through old glass. Details like rotary phones, patterned rugs, and photo-covered walls speak to nostalgia, even without knowing the story behind them.

This isn’t emotion felt by a machine. It’s emotion reconstructed from data.

The machine doesn’t know what memory feels like. But it recognizes its visual signature, and delivers something that resonates with us.

Midjourney doesn’t make art from emotion. It makes art for emotion.

The meaning isn’t in the machine.

It’s in the space between your prompt and your perception of the image it returns.

🔎 Read the post: The Shape of Intelligence to explore how human intention gives AI visuals clarity and emotion.

🔮 What’s Next: Designing with Intelligence

The creative process is no longer linear.

It is not simply brief, sketch, revision, final. Today, the process moves in loops. Fast loops. You generate. You discard. You remix. You refine.

The designer of the future is not the one who draws faster. It is the one who decides faster. The one who knows what to keep, what to reshape, and when to move on.

Midjourney accelerates this rhythm. It does not replace the human process. It compresses it. It gives you more feedback in less time. The faster you see your ideas, the faster you see what works.

This changes the role of the creative mind. Instead of waiting for inspiration to appear, you are working with inspiration in real time. AI design becomes a partner, not a shortcut.

The future belongs to visual thinkers who can direct the flow. Who can see in a grid of images not just options, but meaning. Who can sense which variation belongs to a story, a brand, or a mood.

Midjourney is not the end of creativity. It is the amplifier. The tool that takes the spark of imagination and multiplies it into forms you can test, compare, and shape into a lasting visual identity.

Final Thought

Midjourney is not the destination. It is the departure point.

It hands you raw material, but the meaning comes from you. The images are only the beginning. What matters is how you shape them, where you take them, and what they ultimately say.

Creativity has not disappeared. What has changed is the lens we use to see it. Technology does not replace vision. It sharpens it. Every render is both a solution and a question. It pushes you to refine, to adjust, to imagine again.

In Midjourney’s hands, imagination becomes visible. In your hands, it becomes unforgettable. That is the quiet revolution. One frame, one iteration, one decision at a time.

This is the future of AI design and digital aesthetics. Not machine replacing maker, but machine expanding the canvas. Together they create a dialogue. Together they push the boundaries of what a visual identity can be.

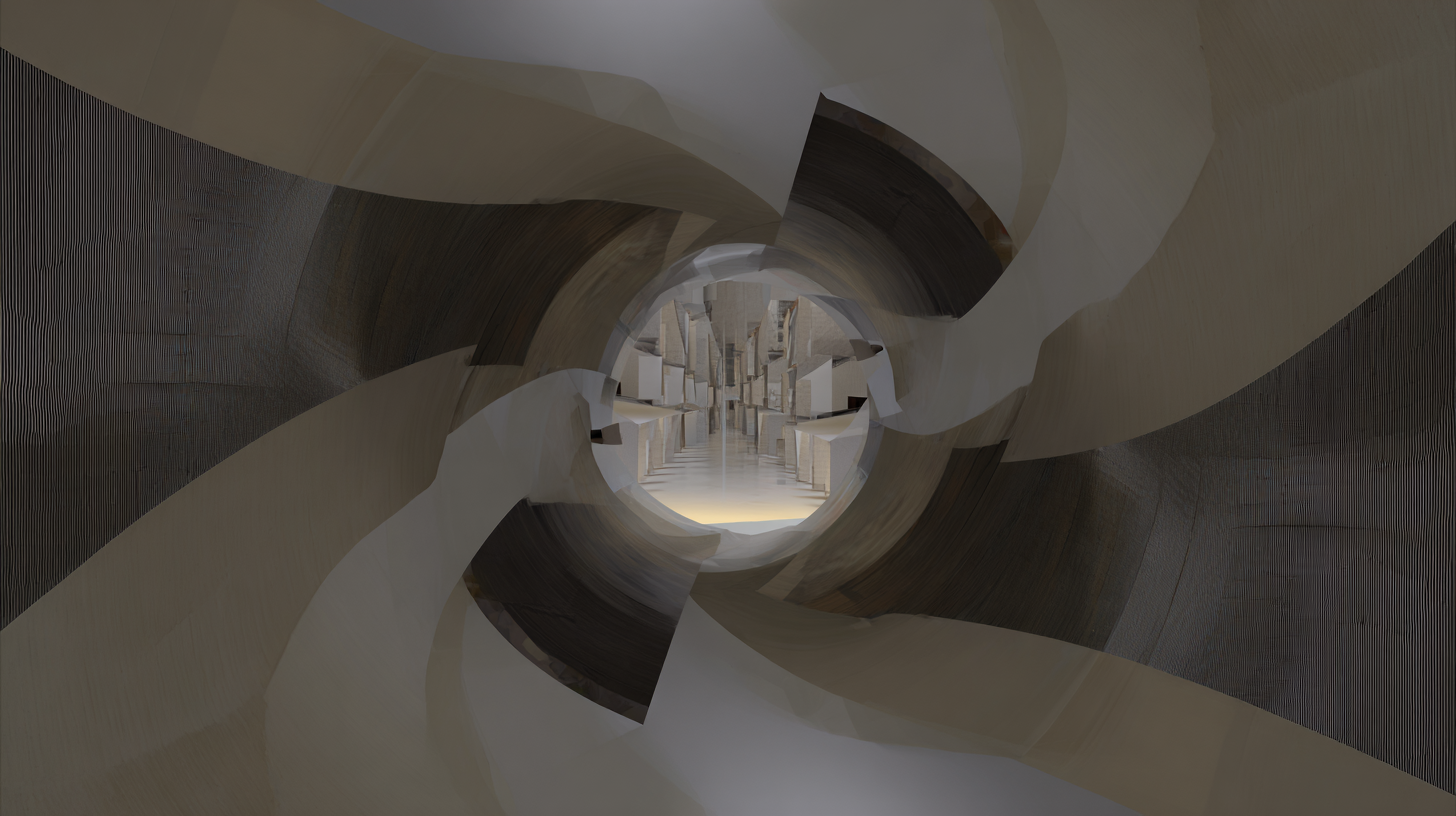

🖼 Note on Visuals

All architectural renderings in this article were created using Midjourney AI. Each image blends algorithmic precision with artistic intention. They showcase the evolving partnership between human creativity and machine intelligence, a partnership where art is no longer just imagined but accelerated.

✨ Want to Turn Vision Into Visual?

If you're ready to explore Midjourney with clarity and purpose, I offer tailored creative services that transform abstract ideas into vivid, artful outcomes. Fast. Effective. Beautiful.

Here’s how we can work together:

🛋️ Stunning Midjourney AI Interior Designs

Custom-designed interiors with intention. Lighting, textures, style, and spatial mood come together to tell a story.

🏛️ Architectural Visuals for Architects

From minimalism to maximalist fantasies. Visualize exteriors, interiors, and urban or rural concept environments with precision.

🎨 Custom AI Moodboards and Concept Visuals

Fashion styling, UI/UX design, and visual concept art. Crafted moodboards that shape direction, spark decisions, and tell a visual story.

📚 Whimsical Storybook Covers

Playful and poetic cover designs powered by Midjourney’s magic. Ideal for books, zines, and digital products with soul.

🎯 Work with me on Fiverr

Your next visual direction deserves more than just prompts. It needs interpretation, refinement, and vision. Let’s create something unforgettable.